So, there are these folks who call themselves "rationalists". You may have seen them online. They refer to "Bayes" a lot, run paid "rationality workshops", collect money to save the world from Skynet, and the like. You might begin to wonder: what do they actually know about rationality?

Generally, the truth value of the statements they make is a pretty elusive matter: they focus on untestable propositions, such as which interpretation of quantum mechanics is "obviously correct" (when you don't know enough of it to even compute atomic orbitals), whether a future superintelligence will be super enough to restore your consciousness from a frozen brain, or how many lives you save by giving them a dollar.

But once in a blue moon they get bold and make well-defined statements. Eliezer Yudkowsky's *Being Half Rational About Pascal's Wager is Even Worse* is one such piece, where the rationalism can actually be tested against facts.

Listen to this:

> For example. At one critical junction in history, Leo Szilard, the first physicist to see the possibility of fission chain reactions and hence practical nuclear weapons, was trying to persuade Enrico Fermi to take the issue seriously, in the company of a more prestigious friend, Isidor Rabi:

> > I said to him: "Did you talk to Fermi?" Rabi said, "Yes, I did." I said, "What did Fermi say?" Rabi said, "Fermi said 'Nuts!'" So I said, "Why did he say 'Nuts!'?" and Rabi said, "Well, I don't know, but he is in and we can ask him." So we went over to Fermi's office, and Rabi said to Fermi, "Look, Fermi, I told you what Szilard thought and you said 'Nuts!' and Szilard wants to know why you said 'Nuts!'" So Fermi said, "Well… there is the remote possibility that neutrons may be emitted in the fission of uranium and then of course perhaps a chain reaction can be made." Rabi said, "What do you mean by 'remote possibility'?" and Fermi said, "Well, ten per cent." Rabi said, "Ten per cent is not a remote possibility if it means that we may die of it. If I have pneumonia and the doctor tells me that there is a remote possibility that I might die, and it's ten percent, I get excited about it." (Quoted in 'The Making of the Atomic Bomb' by Richard Rhodes.)

> This might look at first like a successful application of "multiplying a low probability by a high impact", but I would reject that this was really going on. Where the heck did Fermi get that 10% figure for his 'remote possibility', especially considering that fission chain reactions did in fact turn out to be possible? If some sort of reasoning had told us that a fission chain reaction was improbable, then after it turned out to be reality, good procedure would have us go back and check our reasoning to see what went wrong, and figure out how to adjust our way of thinking so as to not make the same mistake again. So far as I know, there was no physical reason whatsoever to think a fission chain reaction was only a ten percent probability. They had not been demonstrated experimentally, to be sure; but they were still the default projection from what was already known. If you'd been told in the 1930s that fission chain reactions were impossible, you would've been told something that implied new physical facts unknown to current science (and indeed, no such facts existed).

It almost looks like he's trying to paint a picture in your mind: Enrico Fermi knows that fission happens, but is too irrational or dull to conclude that a chain reaction is possible. Another of these self-proclaimed rational super-geniuses made similar assumptions at one of those "rationality workshops": oh, Fermi wasn't stupid, he was just stuck in that irrational thinking which we'll teach you to avoid.

It's an easy picture to paint now that nuclear fission is associated with chain reactions, but no. Just no.

What Fermi did know was that heavy nuclei can be fissioned by neutrons, meaning that when a neutron hits a heavy nucleus, the nucleus *sometimes* splits into two roughly equal pieces (occasionally it even splits into three pieces, and often it does not split at all). Rarely, I must add, because they were doing experiments with natural uranium, which is mostly uranium-238, or U238, and U238 tends to absorb neutrons without splitting.

What was entirely unknown at the time of Fermi's estimate was whether any neutrons are emitted as the nucleus splits (or even afterwards), whether there are enough of them to sustain a chain reaction (you need substantially more than one per fission, because neutrons also get captured without causing fission), and whether they have the right energy.

So fission does not logically imply that neutrons are emitted, and even if they are, you need a complicated quantitative calculation to see whether there will be a self-sustaining chain reaction. Many of the more stable nuclei can be fissioned but cannot sustain a chain reaction; U238 is one example.
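To see why "substantially more than one" matters, here is a toy sketch under loudly simplified assumptions (no geometry, no neutron energies, one average loss probability). If each fission releases `nu` neutrons on average, and each neutron goes on to cause another fission with probability `p_fission` (otherwise it is captured or escapes), then each generation is on average `k = nu * p_fission` times the size of the previous one, and the population grows only when `k > 1`. The function names and every number here are hypothetical; this is an illustration of the threshold, not how an actual reactor calculation is done.

```python
def multiplication_factor(nu: float, p_fission: float) -> float:
    """Average number of next-generation neutrons per neutron (toy model).

    nu: hypothetical mean number of neutrons released per fission.
    p_fission: hypothetical probability that a neutron causes a fission
        instead of being captured or escaping.
    """
    return nu * p_fission


def neutron_population(n0: float, k: float, generations: int) -> list[float]:
    """Expected neutron count in each generation for a constant factor k."""
    counts = [n0]
    for _ in range(generations):
        counts.append(counts[-1] * k)
    return counts


# Same neutrons per fission, different fraction lost to capture (made-up numbers).
for nu, p in [(2.5, 0.3), (2.5, 0.5)]:
    k = multiplication_factor(nu, p)
    final = neutron_population(1000.0, k, 20)[-1]
    trend = "grows" if k > 1 else "dies out"
    print(f"nu={nu}, p_fission={p}: k={k:.2f}, after 20 generations {final:.1f} ({trend})")
```

With the same `nu`, the outcome flips purely on how many neutrons are lost, which is exactly the quantity that had to be measured.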

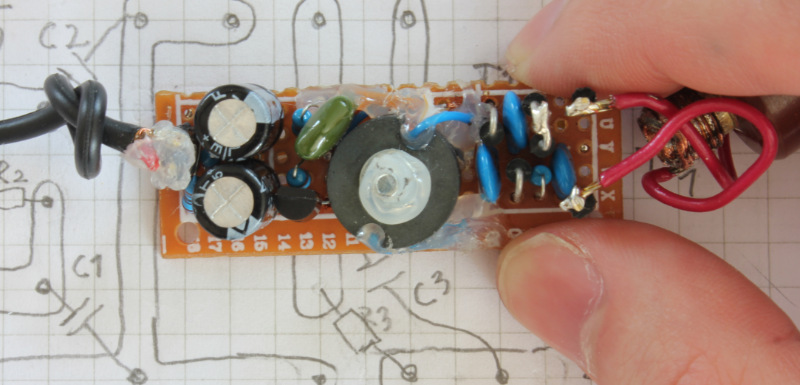

The first thing Fermi and Szilard did was make quantitative measurements to find out whether there are secondary neutrons, how many there are on average, what energy they have, and how often they are absorbed before causing fission. This was very difficult, as all the evidence is very indirect and many calculations must be made to get from what you measure to what actually happens.

When they actually made the necessary measurements and learned the facts from which the possibility of a chain reaction followed (barring new facts), Fermi did the relevant calculations and concluded that a chain reaction was possible, with probability fairly close to 1. He went on to calculate the size of a nuclear reactor and to build one, with quite a clever safety system, with a reasonable expectation that it would work (and reasonable precautions against any yet-unknown positive feedback effects).

Contrary to the impression you might get from reading Yudkowsky, once the quantitative measurements actually implied the possibility of a self-sustaining chain reaction unless there were some new facts, Fermi of course assigned a fairly high probability to it. Extrapolation from known facts was never an issue.

You're welcome to research the story of Chicago Pile-1 and check for yourself.

Case closed as far as Fermi goes. Now on to learning the lessons from someone being very wrong, that someone not being Enrico Fermi.

Was that historical information some esoteric knowledge that is hard to hunt down? No, it is apparently explained even in the same book that Yudkowsky is quoting:

> Fermi was not misleading Szilard. It was easy to estimate the explosive force of a quantity of uranium, as Fermi would do standing at his office window overlooking Manhattan, if fission proceeded automatically from mere assembly of the material; even journalists had managed that simple calculation. But such obviously was not the case for uranium in its natural form, or the substance would long ago have ceased to exist on earth. However energetically interesting a reaction, fission by itself was merely a laboratory curiosity. Only if it released secondary neutrons, and those in sufficient quantity to initiate and sustain a chain reaction, would it serve for anything more. "Nothing known then," writes Herbert Anderson, Fermi's young partner in experiment, "guaranteed the emission of neutrons. Neutron emission had to be observed experimentally and measured quantitatively." No such work had yet been done. It was, in fact, the new work Fermi had proposed to Anderson immediately upon returning from Washington. Which meant to Fermi that talk of developing fission into a weapon of war was absurdly premature.

as well as in many other books. They didn't read it. Lesson: don't write about things you do not know.

What did they read instead to draw their conclusions from? Another quote from Yudkowsky:

> After reading enough historical instances of famous scientists dismissing things as impossible when there was no physical logic to say that it was even improbable, one cynically suspects that some prestigious scientists perhaps came to conceive of themselves as senior people who ought to be skeptical about things, and that Fermi was just reacting emotionally. The lesson I draw from this historical case is not that it's a good idea to go around multiplying ten percent probabilities by large impacts, but that Fermi should not have pulled out a number as low as ten percent.

Lesson: don't do that. Don't scan for instances of scientists being wrong without learning much else. If you want to learn rationality, learn actual science. This whole fission business was such a mess. It is a true miracle that they managed to unravel everything from very indirect evidence in such a short time. There is a lot to learn here.

Also, probability is an elusive matter. If there is a self-sustaining chain reaction, he's "wrong" for assigning 10%: 90% would be closer, and 99% closer still. If there is no self-sustaining chain reaction, he's "wrong" for assigning 10% rather than 0.1%. If someone says that the probability of a die rolling 1 is 1/6, of rolling 2 is 1/6, and so on, then once the die has been looked at, they're wrong six times over.
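One way to put numbers on this after-the-fact "wrongness" is to score a single forecast once its outcome is known. The sketch below uses the logarithmic score, one common scoring rule among several (chosen here for illustration): the log of the probability that was assigned to whatever actually happened, where 0 is perfect and more negative is worse. The function name `log_score` is hypothetical.

```python
import math


def log_score(p_event: float, happened: bool) -> float:
    """Log of the probability assigned to the outcome that actually occurred.

    0.0 is a perfect score; more negative means more "wrong" in hindsight.
    """
    p_outcome = p_event if happened else 1.0 - p_event
    return math.log(p_outcome)


# If the chain reaction turns out to be possible, higher forecasts score better...
for p in (0.10, 0.90, 0.99):
    print(f"forecast {p:.1%}, event happened: {log_score(p, True):.3f}")

# ...and if it turns out to be impossible, lower forecasts score better.
for p in (0.10, 0.001):
    print(f"forecast {p:.1%}, event did not happen: {log_score(p, False):.3f}")

# A fair die gives every face 1/6, so whichever face shows, the score is log(1/6).
print(f"fair die, any face: {log_score(1 / 6, True):.3f}")
```

The fair-die forecast scores log(1/6) ≈ -1.792 whichever face comes up, yet it is still the right forecast to make before the roll; a single outcome cannot tell you whether a probability was well chosen.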

But what matters in probability is that, out of all the times Fermi assigns something a 10% probability, about 1 in 10 should turn out to be true.
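That property is called calibration, and it can only be checked over many forecasts, never one. A minimal sketch: group (stated probability, came true) pairs by the stated probability and compare it with the observed frequency. The data here come from a hypothetical, perfectly calibrated forecaster simulated with Python's `random` module; the helper name `calibration_table`, the probabilities used, and the sample size are all made up for illustration.

```python
import random
from collections import defaultdict


def calibration_table(forecasts):
    """Group (stated_probability, came_true) pairs by stated probability and
    return {stated_probability: observed fraction that came true}."""
    buckets = defaultdict(list)
    for p, came_true in forecasts:
        buckets[p].append(came_true)
    return {p: sum(outcomes) / len(outcomes) for p, outcomes in sorted(buckets.items())}


# Hypothetical, perfectly calibrated forecaster: each event it rates at p
# really does happen with probability p.
rng = random.Random(42)  # fixed seed so the run is reproducible
forecasts = []
for _ in range(10_000):
    p = rng.choice([0.1, 0.5, 0.9])
    forecasts.append((p, rng.random() < p))

for p, observed in calibration_table(forecasts).items():
    print(f"said {p:.0%}: {observed:.1%} came true")
```

Each individual 10% forecast still looks "wrong" once its outcome is known, but the observed frequencies land close to the stated ones, which is the sense in which such a forecaster is right.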

Oh, and on the main enterprise of saving the world: this attitude is precisely the sort of thing that shouldn't be present there. If you grossly underestimate even Fermi, and grossly overestimate how much you can understand about such a topic from a very cursory reading, well, your evaluation of living people and active fields of research is not going to be any better.